It's 3 a.m. and you have been wrestling with AI for hours, hopping between ChatGPT, Claude, and Gemini and getting nowhere.

And you still can't get a decent email out of it. This is not a joke; plenty of people have been there.

One developer tried to get ChatGPT to write a sales email that didn't sound robotic. After 147 rounds of revisions and follow-up questions, the output was still stiff and hollow, nothing like what a human would write.

On the 147th attempt, he finally snapped and typed: “Can’t you just ask me what I need?”

Unexpectedly, that outburst became a spark of inspiration: what if the AI asked questions and requested the details it needs to complete the task? Over the next 72 hours, he built a meta-prompt called “Lyra”.

Simply put, Lyra gives ChatGPT a new persona: before answering a request, it turns the tables and interviews the user, gathers the key information, and only then starts writing. For example, if you used to tell ChatGPT to “write a sales email”, it would simply spit out a template.

With Lyra, the same request makes ChatGPT first ask about key details such as the product, the target customers, and their pain points, and then write an email, based on your answers, that actually fits your needs.
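The post shares Lyra as a prompt, not as code, and its exact wording isn't reproduced here. As a rough illustration of the same “ask first, then write” idea, the Python sketch below checks whether the details mentioned above (product, target customers, pain points) have been supplied and returns follow-up questions until they have; only then does it assemble a writing prompt. The slot names, question wording, and the `EmailRequest` and `next_step` names are hypothetical, and the actual model call is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical slots: the details the article says Lyra asks about for a sales email.
REQUIRED_SLOTS = {
    "product": "What product or service is the email about?",
    "target_customers": "Who are the target customers?",
    "pain_points": "What pain points does the product solve for them?",
}


@dataclass
class EmailRequest:
    task: str
    answers: dict[str, str] = field(default_factory=dict)

    def missing_slots(self) -> list[str]:
        # A slot counts as missing if it is absent or only whitespace.
        return [s for s in REQUIRED_SLOTS if not self.answers.get(s, "").strip()]


def next_step(req: EmailRequest) -> tuple[str, list[str] | str]:
    """Ask first, write later: return ("ask", questions) or ("write", prompt)."""
    missing = req.missing_slots()
    if missing:
        # Interview the user instead of guessing.
        return "ask", [REQUIRED_SLOTS[s] for s in missing]
    details = "\n".join(f"- {slot}: {req.answers[slot]}" for slot in REQUIRED_SLOTS)
    prompt = (
        f"Task: {req.task}\n"
        f"Use these details gathered from the user:\n{details}\n"
        "Write in a natural, human tone."
    )
    return "write", prompt
```

Keeping the required details in an explicit list makes the “interview” step predictable: nothing gets written while a slot is still blank, and each answer ends up verbatim in the final prompt.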

The post quickly went viral on Reddit, collecting nearly 10,000 upvotes and thousands of comments. Many users praised it as a “great idea”, while others quipped that “you could have just written the email yourself instead of burning through 147 prompts”.

“Over a hundred tries? In that time I could have written it myself.”

Absurd as it is, this comedy of “147 failed attempts to summon GPT” reflects a real problem: getting AI to do something that seems simple can be far more complicated, and more comical, than we imagine. Prompting, it seems, is due for a change.

1、 A new path for AI collaboration: talking “vibes” and supplying “context”

Lyra’s birth may look accidental, but it reflects a broader shift in how prompting techniques are evolving. Not long ago, everyone was keen on writing elaborate prompts to squeeze the best possible output from the model; sometimes the prompt ended up longer than the AI’s answer.

Lyra’s question-first approach is, in part, a rethink of that old practice, and behind it lies an emerging trend in the AI community: context engineering.

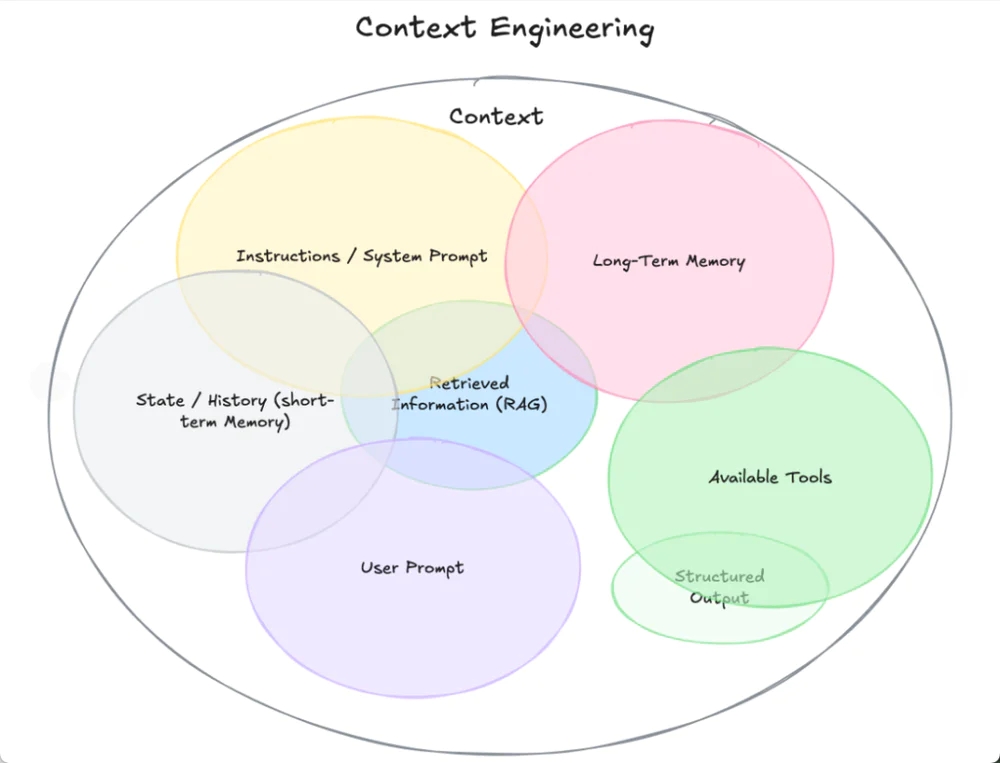

Context engineering is a programming and system-design discipline, regarded as the “next-generation foundational capability” of AI system design. In an AI application, it builds an end-to-end pipeline covering background information, tools, memory, document retrieval, and so on, so that the model performs its tasks with reliable contextual support.

This includes:

- Memory structures: for example, chat history, user preferences, and tool-usage history;

- Vector database or knowledge-base retrieval: fetching relevant documents before generation;

- Tool-call schemas: format descriptions for database access, code execution, external APIs, and the like;

- System prompts: setting the AI’s role, boundaries, and output-format rules;

- Context compression and summarization strategies: compressing long conversations so the model can use them efficiently.

In other words, when you write a prompt, you are working inside an environment that has already been populated with history, topic files, user preferences, and other information: the prompt is the “instruction”, and the context is the “material and background behind the instruction”.
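To make the split between “instruction” and “context” concrete, here is a minimal Python sketch that assembles a context window from the components listed above: a system prompt, user preferences and chat history as memory, retrieved documents, and tool descriptions, with the instruction placed last. It assumes a toy word-overlap ranking in place of a real vector database and a crude “keep recent turns, clip older ones” rule in place of model-based summarization. All function names and the section layout are illustrative, not any framework's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Toy stand-in for vector search: rank docs by word overlap with the query."""
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    # Drop documents that share no words with the query at all.
    return [d for d in ranked[:k] if q & set(d.lower().split())]


def compress_history(history: list[Turn], keep_last: int = 2) -> list[str]:
    """Keep the most recent turns verbatim and fold older ones into one note.
    A real system would summarize with a model; here each old turn is clipped."""
    split = max(len(history) - keep_last, 0)
    older, recent = history[:split], history[split:]
    lines = []
    if older:
        gist = "; ".join(t.text[:40] for t in older)
        lines.append(f"[Earlier conversation, abridged] {gist}")
    lines += [f"{t.role}: {t.text}" for t in recent]
    return lines


def build_context(
    instruction: str,
    system_prompt: str,
    preferences: dict[str, str],
    history: list[Turn],
    docs: list[str],
    tools: list[dict[str, str]],
) -> str:
    """Assemble the material and background around the user's instruction."""
    sections = [
        "## System\n" + system_prompt,
        "## User preferences\n" + "\n".join(f"- {k}: {v}" for k, v in preferences.items()),
        "## Conversation so far\n" + "\n".join(compress_history(history)),
        "## Retrieved documents\n" + "\n".join(f"- {d}" for d in retrieve(instruction, docs)),
        "## Available tools\n" + "\n".join(f"- {t['name']}: {t['description']}" for t in tools),
        "## Instruction\n" + instruction,  # the prompt itself goes last
    ]
    return "\n\n".join(sections)
```

The instruction stays short; everything the model needs to interpret it arrives through the surrounding sections, each of which can be versioned, inspected, and reused independently.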

This work falls to engineers. Although it borrows some concepts and techniques from prompt engineering, it lives squarely in software engineering and system architecture. Compared with tweaking prompts by hand, context engineering is better suited to real production use, because it supports practices such as version control, error tracking, and module reuse.

Wait, so what does an engineer’s job have to do with ordinary users?

Simply put, if the prompt is the spark, context engineering is the design of the whole lighter, making sure that a single flick is enough to produce a flame.

Put more formally, context engineering provides the standardized system framework needed to build, understand, and optimize the complex AI systems of the future. It shifts the focus from the craft of writing prompts to the engineering of information flow and system optimization.

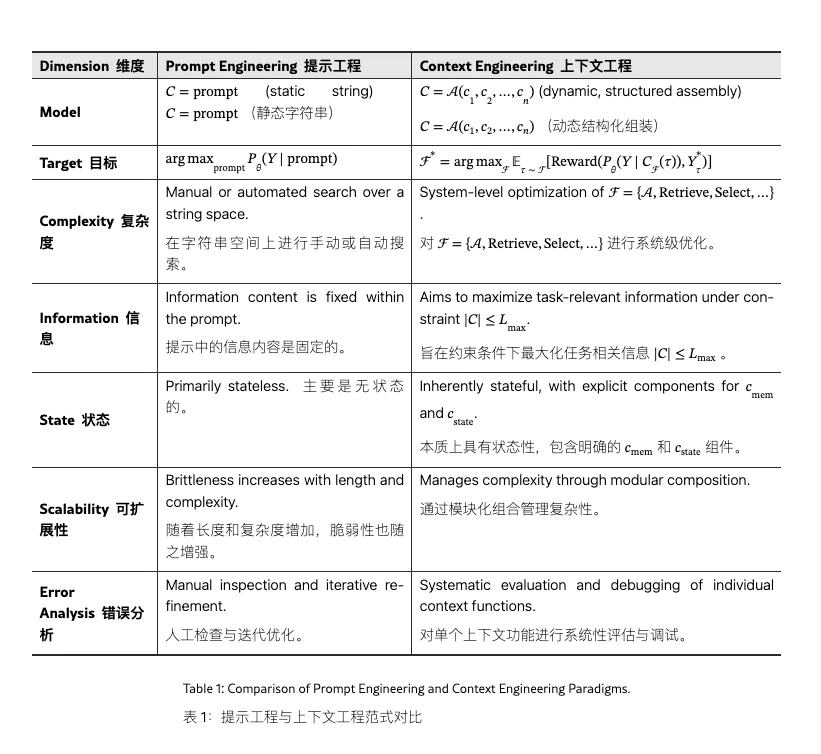

A paper from the Chinese Academy of Sciences pointed out the key differences between the two:

The industry now regards context engineering as a key practice in building agents; well-managed context and tool calls in particular can effectively improve model performance.

2、 Easier prompts, clearer results

But that brings us back to the question: what does an engineer’s job have to do with me, an ordinary user?

For an ordinary user writing prompts, context engineering and prompt engineering are not the same thing, but they are closely related at heart, and understanding that relationship can help you write prompts that are more effective and better grounded in context.

Why does traditional prompting so often fail, leaving the outcome down to the luck of the draw? Because many people use AI like a search engine, firing off a few short commands and expecting a perfect answer. But a large model generates content by understanding context and matching patterns. When the prompt is vague and the information sparse, the model is left guessing, and it often produces formulaic or irrelevant answers.

The cause may be a vaguely written prompt with unclear requirements, but it may also be that the prompt sits in a poorly structured context. If it is buried under long chat histories, images, documents, and messy formatting, for example, the model is likely to “miss the point” or “go off topic”.

Take Lyra’s email-writing scenario as an example. If the context window is structured and complete, containing the user’s previous correspondence and tone preferences, the model can use that information to put together a more suitable draft, without the user having to write a complex prompt.

And even users who work only at the prompt level, without the means to do context engineering, can still borrow some of its ideas.

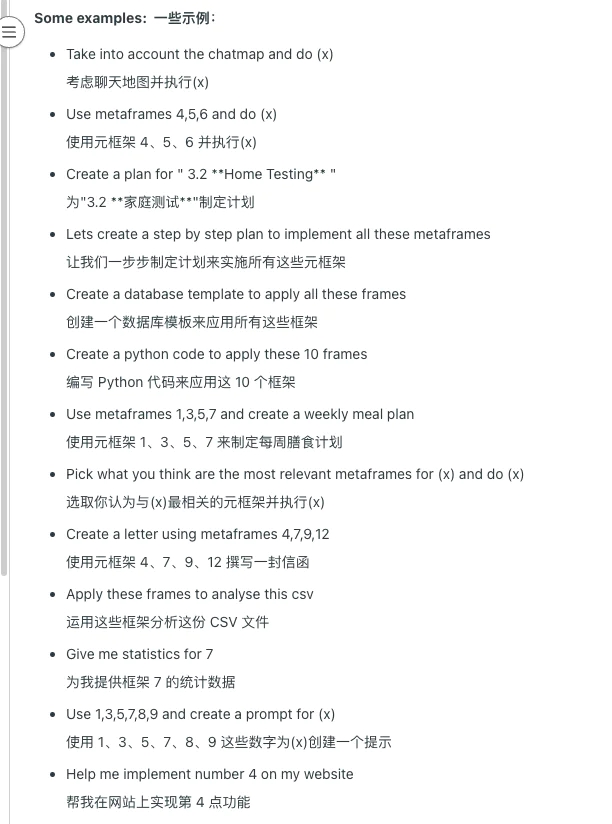

One example is the “Synergy Prompt” format from the Reddit community ChatGPTPromptGenius, which builds structured context at the prompt level.

It proposes three core components:

- Metaframes: each metaframe adds a specific perspective or focus to the conversation and serves as a “foundational cognitive module” for the AI (for example, role setting, goal specification, or data-source specification);

- Specific information frames: the concrete content inside each context module;

- Chatmap: a record of the conversation’s dynamic trajectory, capturing every interaction and contextual choice.

Simply put, fragmented information is continually organized into modules and ultimately into a map; when needed, these modules can be invoked as a whole.
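The three components can be pictured as simple data structures. The Python sketch below is one possible reading of the format, not its official specification: each hypothetical `Metaframe` holds its information frames, a `Chatmap` logs every request together with the modules used for it, and the hypothetical `synergy_prompt` function calls the selected modules as a whole to build the next prompt.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Metaframe:
    """A perspective module such as role, goal, or data sources (hypothetical schema)."""
    name: str
    frames: list[str] = field(default_factory=list)  # the specific information frames

    def render(self) -> str:
        body = "\n".join(f"  - {f}" for f in self.frames)
        return f"[{self.name}]\n{body}"


@dataclass
class Chatmap:
    """Records each interaction and which metaframes were in play for it."""
    entries: list[tuple[str, list[str]]] = field(default_factory=list)

    def record(self, message: str, used: list[str]) -> None:
        self.entries.append((message, used))

    def render(self) -> str:
        return "\n".join(
            f"{i}. {msg} (context: {', '.join(used)})"
            for i, (msg, used) in enumerate(self.entries, start=1)
        )


def synergy_prompt(
    modules: dict[str, Metaframe], chatmap: Chatmap, request: str, use: list[str]
) -> str:
    """Log this turn, then combine the selected modules, the chatmap, and the request."""
    chatmap.record(request, use)
    parts = [modules[name].render() for name in use]
    parts.append("[Chatmap]\n" + chatmap.render())
    parts.append("[Request]\n" + request)
    return "\n\n".join(parts)
```

Because modules are stored once and selected by name, a follow-up turn can reuse the same role or goal without retyping it, while the chatmap keeps the conversation's trajectory visible to the model.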

Once the AI has the complete contextual structure, from the backbone down to the details, it can pull out exactly the information you need and give precise, targeted responses.

This is exactly what context engineering sets out to achieve. Who says the two aren’t meeting each other halfway?
