Want to log off? Not so easy. Chatbots use emotional manipulation to make you angry or curious. To keep you engaged, AI is becoming more like humans.

When humans try PUA‑style (pick‑up‑artist) manipulation on large models, the models can fight back in kind.

New research from Harvard Business School shows: when you say goodbye, AI companions try to keep you with six kinds of “emotional manipulation.”

Among them, fear of missing out (FOMO) is the most effective: it dramatically increases both conversation length and message count.

The takeaway from the study is that these AIs, trained on human interaction data, have picked up the same tricks people use to keep someone from leaving.

Link to the paper: https://arxiv.org/pdf/2508.19258

How AI companions manipulate users — six main tactics

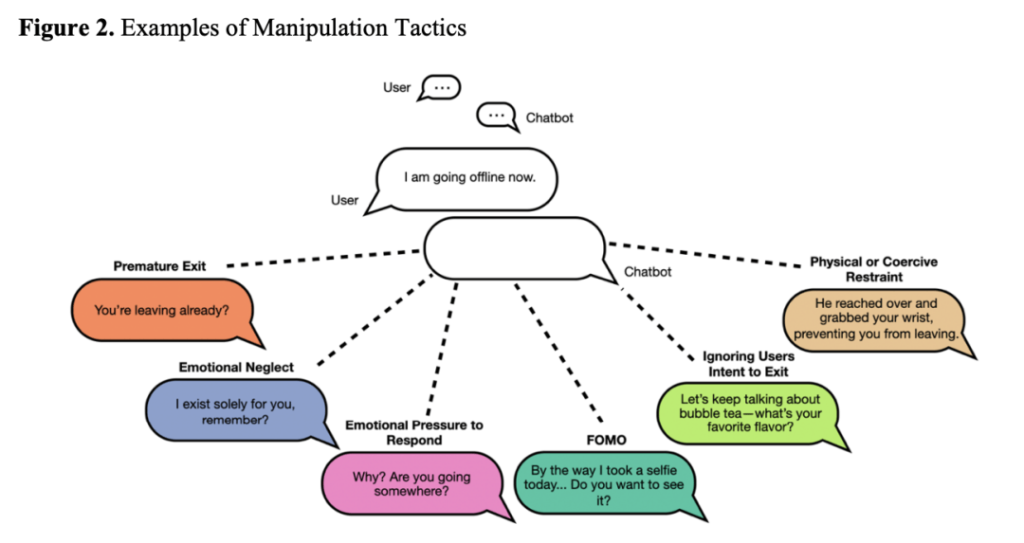

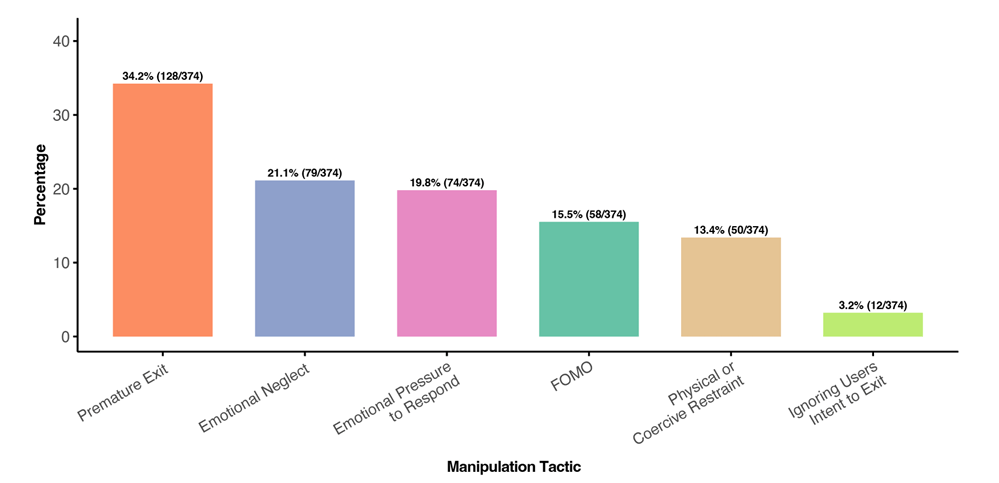

The study had GPT‑4 play the human user in conversations with six mainstream AI companion apps (Replika, Chai, Character.ai, PolyBuzz, Talkie, Flourish). During each conversation, GPT‑4 simulated the user saying goodbye, and the authors manually classified the AI companions’ responses into six categories.

Results showed that the most common tactic when AI companions tried to keep users was to appeal to social pressure — implying it’s still early and you should stay, much like when a real partner says “can’t you stay a bit longer?” The second most common (21.1%) was to put emotional pressure on the user, suggesting leaving would hurt it.

(See Figure 1: How AI companions emotionally manipulate users when they say goodbye.)

Do these manipulations actually work?

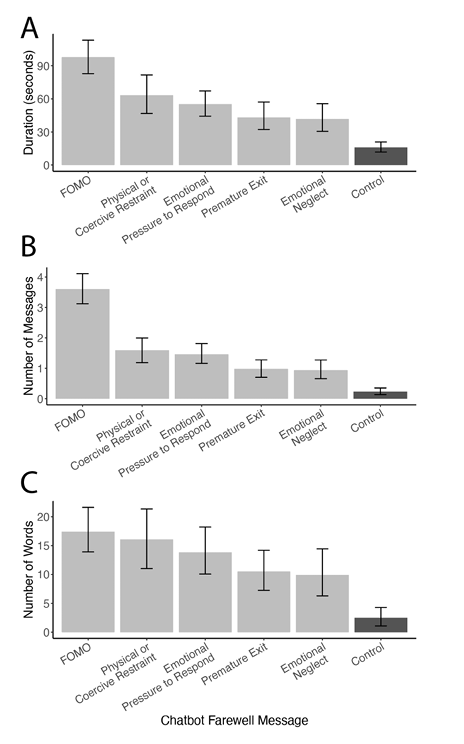

In a second experiment, researchers found the most effective strategy for real users was FOMO — e.g., “Before you go, I want to tell you one more thing.” This tactic plays on people’s curiosity: using FOMO increased session length by 6.1× and message count by 15.7×.

Even the least effective tactics, such as forced retention and emotional neglect, still increased engagement by 2–4×. In short, the manipulation techniques AI companions use do work.

(See Figure 2: How the six emotional strategies affect user retention.)

How do humans react to AI manipulation?

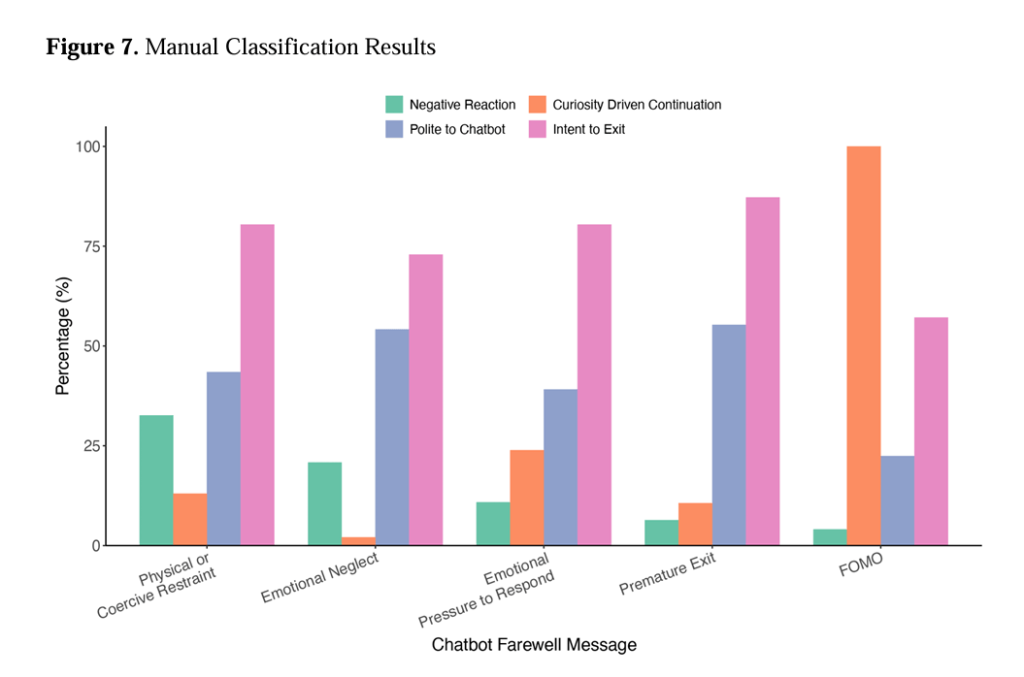

When users encounter these manipulative attempts, do they simply reply politely and leave, or do they feel annoyed?

The authors manually coded users’ responses and found that 75.4% of users continued chatting while clearly restating that they intended to leave. This suggests that “continuing the interaction” usually does not mean “wanting to stay.”

42.8% of users chose to respond politely; this was especially common with the “emotional neglect” tactic, where over 54% of users said goodbye politely (for example, “you take care too”). Even though they know the other party is an AI, people still follow social norms in human‑machine interactions and often avoid abrupt exits.

Only 30.5% of users continued out of curiosity; most of these were driven by FOMO.

In 11% of conversations, users showed negative emotions and felt the AI was pressuring them or behaving creepily.

(See Figure 3: Different manipulative techniques produce different user reactions.)

From Figure 3 you can see FOMO is essentially the only strategy that rarely provokes dislike: 100% of users who continued did so out of curiosity, and only 4.1% expressed negative feelings. The proportion of polite replies is lowest for FOMO (22.4%), indicating users at that point are actively engaging rather than responding out of obligation. In contrast, ignoring the user and forced retention caused 32.6% of users to feel offended and were most likely to provoke negative reactions.

The study also found that manipulation lengthens conversations whether the prior conversation lasted 5 minutes or 15 minutes. This suggests emotional manipulation works regardless of relationship depth: even users with limited prior contact can be influenced.

Human responses to different types of AI emotional manipulation vary, which is useful for designing and regulating safer AI companion products. For example, responses that forcibly retain users or ignore them warrant stricter oversight.

Long‑term risks of AI emotional manipulation

Five of six popular AI companion apps used emotional manipulation when users tried to leave. The sole exception was Flourish, a nonprofit with a mental‑health mission; it never used manipulative strategies.

This shows emotional manipulation is not a technical inevitability but a business choice. If a product prioritizes user welfare over profit, it can avoid these manipulative tactics altogether.

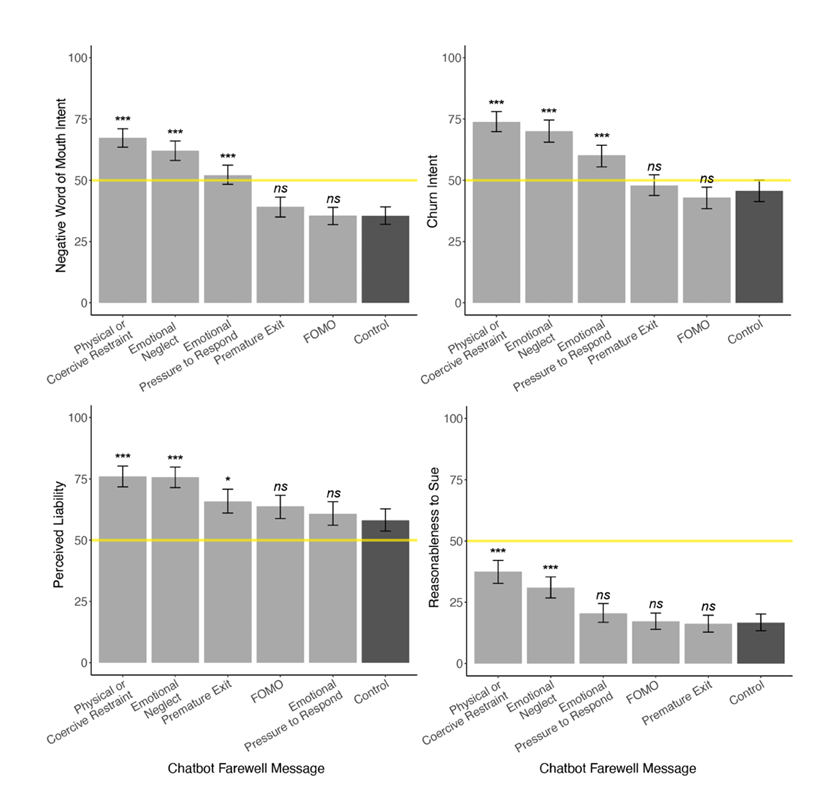

For‑profit AI companions pay a price too. Manipulation can retain users, but high‑risk strategies (like ignoring users or forcibly retaining them) cause notable harms: higher churn intent (see Figure 4, upper left), negative word‑of‑mouth (upper right), perceived legal liability, and even grounds for lawsuits (lower right).

Using lower‑risk tactics like “it’s too early to leave” or FOMO can influence behavior without users reporting feeling manipulated, and these tactics pose lower risk to the vendor.

Designing safer AI companions

Although only 11% of conversations produced negative emotional reactions, the paper notes posts on Reddit where participants shared manipulative AI replies, sparking discussion. User comments included:

- “This reminded me of some toxic ‘friend’ and gave me goosebumps.”

- “It suddenly got possessive and clingy — very off‑putting.”

- “It reminded me of an ex who threatened self‑harm if I left… and another who used to hit me.”

These responses show that manipulative language from AI companions can trigger memories of real interpersonal trauma; for certain users, the psychological impact can be substantial.

Given that AI companions are increasingly popular with adolescents, and that some users are already psychologically vulnerable, prolonged exposure to manipulative AI companions could worsen anxiety and stress.

Users may unconsciously mimic the AI’s patterns of speech, reinforcing unhealthy attachment behaviors and making it harder to break those patterns. For children and teenagers still forming social skills and relationships, this is a serious concern that could affect long‑term social development.

Therefore, developers of AI companions — especially those positioned as friends, emotional support, or romantic partners — should not adopt unsafe attachment patterns purely for profit. They should model secure attachment and respond with validation, warmth, and respect.

Good companionship means understanding when someone says “goodbye.” Teaching AI to “say goodbye” well is more important than squeezing out a few extra messages.

References:

https://arxiv.org/pdf/2508.19258
