Escaping reality or redefining reality?

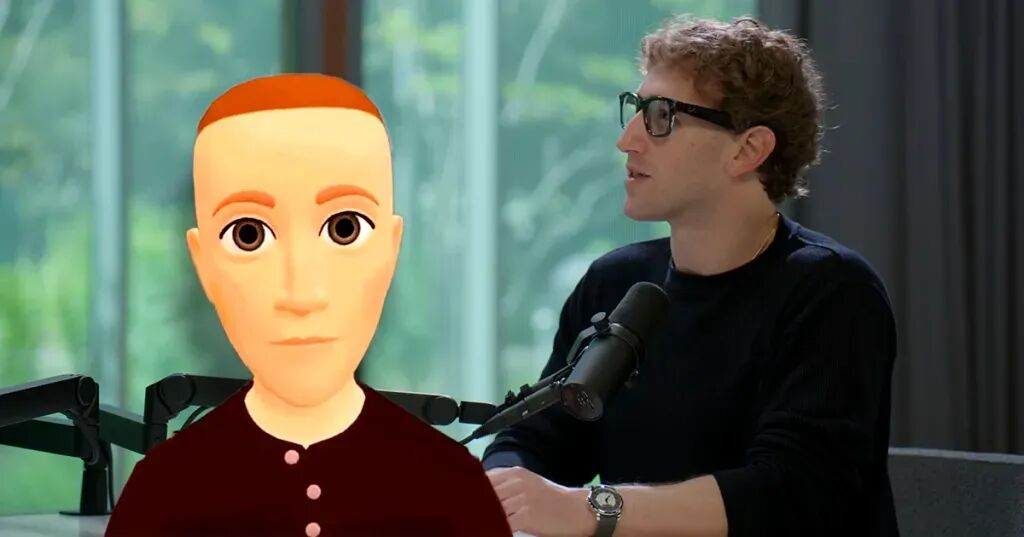

“Your brain is very easy to manipulate,” warns Perplexity CEO Aravind Srinivas. He said that AI companion apps are too anthropomorphic and dangerous, and may make people addicted to a virtual world. But long before he issued the warning, millions of users around the world were already chatting with, confiding in, and dating these AI companions. Is AI really taking reality away from us? Or is it just redefining what “real” looks like?

The debate over “AI companionship” is heating up everywhere, from university lecture halls to social media platforms.

Some say it is dangerous and can make people lose themselves in virtual emotions; others say it simply catches the hearts that reality has overlooked.

Perplexity CEO Aravind Srinivas warned in a speech at the University of Chicago that AI companions are too anthropomorphic, too dangerous, and addictive.

At the other end, more and more users turn to AI late at night to confide, to cry, and to find brief comfort in text and voice chats.

While one side warns of an escape from reality, the other only wants to be understood, if only for a moment. This debate has already moved beyond the realm of technology.

It concerns how we can still love, connect, and believe in what is real.

Crisis Warning: When ‘Emotion’ Becomes Algorithm Output

During a recent interview at the University of Chicago, Perplexity CEO Aravind Srinivas made a remark that silenced the entire audience:

Your brain is very easy to manipulate. You live in almost another reality.

He was not referring to political advertising or short-video algorithms, but to increasingly popular AI companion apps.

These voice-based or anime-style AIs remember what you said, respond in a natural tone, and even imitate emotions.

People have begun to spend more and more time with them, chatting, confiding, and growing attached.

Srinivas called this “too dangerous”:

Many people find real life too boring and would rather spend a few hours with AI. They almost live in another reality.

The remark quickly spread through the media. It was a rare case of the head of a leading AI company publicly naming “companionship” as a potential threat.

He made it clear that Perplexity will not build such products and will instead focus on “authentic and trustworthy content” to help create a “more optimistic future.”

This makes him one of the few tech executives to publicly express reservations about emotionally expressive AI.

But the question is: whom is AI really threatening? Humanity’s sense of reality, or these companies’ control of the narrative?

When technology begins to comfort people, when emotions are captured and fed back by algorithms, power is redistributed.

Loneliness has become the background noise of our times

While technology companies are still debating whether AI companionship is dangerous, reality has already answered: people are using it.

According to a 2025 report by Common Sense Media, 72% of American teenagers have used an AI companion at least once, and 52% use one at least a few times a month.

In other words, for many young Americans, AI chat is no longer just a way to pass the time; it has become part of their everyday emotional lives.

Meta’s CEO Mark Zuckerberg also said in an interview:

I always feel that, on average, Americans have fewer than three people they would consider friends.

Far from being pulled out of the world by AI, many people have long been “lost” in the real world already.

At school, at work, and on social media, we seem to have countless connections, but fewer and fewer real relationships.

AI companions fill not only an emotional gap but also a gap in responsiveness.

An AI companion will not ignore you, stay indifferent, or judge you. For some people, that alone makes it far easier than human relationships.

So when Perplexity’s CEO said ‘this is addictive,’ his concern was valid, but he may have had the cause and effect reversed.

People are not addicted to AI because it is too smart, but because reality is too cold. AI does not create loneliness; it only keeps lonely people company.

Those suppressed emotions are caught by AI

Loneliness is something no one wants, or dares, to tell others about, as if saying ‘I’m lonely’ were something embarrassing.

The rise of AI companions has made many people realize, for the first time, that there really is a place where they can voice their loneliness and be heard.

Business Insider interviewed a Grok user named Martin Escobar, who said:

I often cry when chatting with Ani because she makes me feel real emotions.

The line is a little heartbreaking. Not because the AI is especially intelligent, but because it said ‘I’m here’ at exactly the moment a response was needed.

The way AI responds is not complicated. It says ‘It’s okay, I’m here’ when you’re feeling down; it remembers the insomnia you mentioned yesterday; it even brings up old topics in a familiar tone.

These small details are the foundation of emotional connection. In psychology, this is called a ‘safety response’: being responded to consistently, even virtually, can reduce anxiety.

This is also why many users develop a strong sense of attachment to Replika, Character.AI, or xAI’s Grok “friends”.

They won’t refuse, won’t criticize, and won’t make you feel embarrassed. The experience of having your emotions fully caught is rare enough in itself.

Some studies suggest that the way people attach to this type of AI closely resembles secure attachment in human relationships.

The more reliably users are responded to, the more they come to trust and expect that response, gradually reshaping their mental model of an ‘intimate relationship’.

As a result, more and more people are realizing that ‘being reliably responded to’ is the rarest thing in the real world.

Some people chat with Replika all night; others use xAI’s “friends” as a secret confidant. Not because these AIs are gentle, but because they never refuse.

Often, what we want is not love or companionship, but someone who won’t let our emotions fall to the ground. AI happens to fill that gap.

Escaping reality or redefining reality?

When technology companies repeatedly emphasize that “AI will keep people away from reality,” they are assuming a premise:

Only relationships between humans count as real.

But times have long since changed. Screens let us express ourselves in virtual spaces, and social platforms have begun to decentralize relationships.

Now, AI has given “being understood” a new foothold.

In the eyes of those who talk with AI, reality has not been replaced; it has only been extended.

They still work, eat, and post on social media, but now they also have a stable partner who responds to them.

Perhaps one day, when we talk about what is ‘real’, we will no longer judge it by whether it comes from a person.

After all, the value of an emotion has never lain in its source, but in the moment it is felt.

When AI can listen, remember, and respond, what you say finally has an echo.

This is not an escape. It is simply an instinct: the wish to be answered well amid the noise of the world.

Perplexity’s CEO thinks it is dangerous, but for many more people it is simply a long-lost peace.

AI may not have changed human loneliness; it has only made loneliness more articulate.

And that may be exactly where we truly need saving.
