Information barriers are everywhere in daily life. In the family group chat, the health posts that elders forward about “repeatedly boiled water causing cancer” go ignored, while the internet meme you share falls flat and no one picks it up. At a gathering with friends, the talk turns to international news. One person says, “That’s what happened,” while another looks confused and says, “What I saw was completely different.”

The discussion of “algorithmic alienation” keeps simmering on Chinese social networks. From intimate relationships to public debate, algorithms seem to have become the “culprit” behind every estrangement and conflict.

At the end of 2024, “brain rot” was chosen as the Oxford University Press Word of the Year. Chinese marketing accounts quickly let their imaginations run, tying it tightly to short videos and algorithms, and even coined a new concept, “fool resonance,” to stoke collective anxiety, which has kept simmering this year. At its core, though, this anxiety always comes back to a term used constantly on social networks: the “information cocoon.”

We assume by default that algorithms trap us in homogeneous information, and that this ultimately leads to polarized views and estranged relationships. But is this really the case? Does the “information cocoon,” regarded as a ferocious beast, really exist? And is it really caused by algorithms alone?

Scholars in China and abroad have studied this question empirically and reached counterintuitive conclusions. Simulation experiments found that algorithmic recommendations are more diverse than what we choose on our own, and large-sample user survey data also suggest that the “information cocoon” has been exaggerated.

Compared with the pre-algorithm era, the amount of information we now encounter in a single day is probably what people in ancient times would have encountered in a year. Sometimes we even need to build a “cocoon” for ourselves deliberately in order to cope with this era of information overload.

You may just want to hide in the “cocoon” for a while

On social media, a young man described how his view of the “information cocoon” changed. At first he worried that a long-term one-sided information diet would weaken his understanding, and he was extremely anxious about it. As his life experience grew, he gradually realized that this worry was more like “fearing the future in advance”: “We have always lived in reality, which is fluid and interwoven. The amount of information we take in far exceeds what a cocoon could hold.” This realization quietly points to the truth behind the “cocoon.”

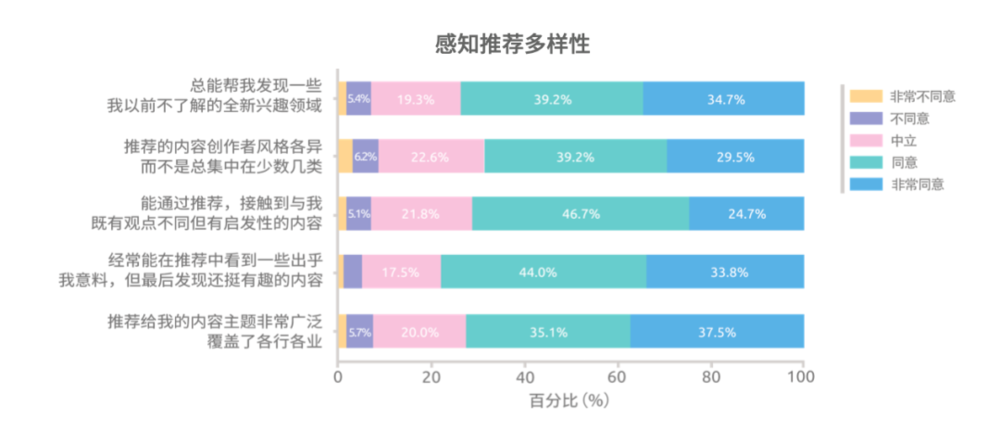

On November 7, the Urban Communication Innovation Research Center of Shenzhen University released the “Algorithm Practice and ‘Breaking the Cocoon’ Report for Short Video Users” (hereafter the Shenzhen University “Report”), which offers new data. The research team surveyed 1,215 users by questionnaire, conducted in-depth interviews with more than 20 users, and ran agent-based experiments simulating different user behaviors. The results show that most users recognize the diversity of recommended content and say that the algorithm helps them broaden their horizons.

Algorithm Practice and “Breaking the Cocoon” Report for Short Video Users

Users’ attitudes toward algorithmic recommendation tend to be rational: 62.5% believe it has “advantages and disadvantages, depending on how the platform and individuals use it,” 25.3% hold a positive attitude, and only 8.6% believe it “will limit their horizons.” These figures suggest that the idea of being “trapped in an information cocoon” is somewhat exaggerated.

From a behavioral perspective, when the weight of reality leaves us breathless, actively choosing familiar and relaxing content is closer to a survival strategy of self-protection. With work pressure growing and the cost of socializing rising, most people live every day with worries about wages and rent, mental exhaustion, and loneliness. After a tiring day of work, we have no energy left to understand a completely different world or to empathize with lives that never intersect with ours. At that moment, the phone screen is where the mind goes to rest. Faced with the high-density information that algorithms push, people just want to swipe past it quickly, relax, and hide in the “information comfort zone” for a while.

One netizen put it bluntly: “It’s not that I don’t know the world has a complex side, but on the subway after work, I don’t want to watch those anxiety-inducing analyses anymore. I just want to watch a few cooking videos and clips of pets’ daily lives to unwind.” This choice is not algorithmic “manipulation” but a necessary response to real pressure. People actively choose easy content, yet regard algorithmic recommendation as the culprit that “traps” them.

When we discuss the dangers of the “information cocoon,” we overlook an important premise: only when you have enough time, energy, and resources to explore the wider world can you even begin to worry about being limited by information. In reality, our range of activity may be confined to the straight line between home and office, our social circle is fixed among colleagues and relatives, and our career choices are constrained by education, region, and family background. These real-life “social cocoons” shape our cognitive boundaries far more than the content that algorithms recommend. In fact, the content that algorithms recommend is far more diverse than our social circles.

When we talk about the “information cocoon,” we may be using a technical term to express a deeper sense of social powerlessness. We find it hard to change our careers and life situations, and we are constantly anxious about missing important information and being left behind by the times, so we project this powerlessness onto algorithms and accuse technology of limiting our vision. Technology cannot speak for itself, so it has become everyone’s “punching bag.”

What needs to be made clear, however, is that pointing the finger entirely at technology sidesteps the complexity of the real information environment and rules out the possibility of living with it calmly. At the end of the day, algorithms are just tools that humans use to process information. Tools are paths rather than destinations, and we ourselves decide where we ultimately arrive.

Algorithms have been made to carry too much blame that is not theirs

Is the “information cocoon” caused by algorithms? To clarify the relationship between the two, we first need to dispel the misconceptions that marketing accounts have constructed.

Despite the hype around “brain rot” online, the original definition from Oxford University Press did not refer to any single media product, nor did it link the term directly to algorithms. In Chinese public discourse, however, the concept has been simplified to “scrolling short videos = brain degeneration,” with algorithms cast as the “instigator” behind it.

In the era of social media, we can all sense that conflicts online are often more intense and extreme than those in daily life. Ideological barriers and differences in position have produced many “trolls” and “keyboard warriors,” giving rise to the phenomenon known as “polarization.” This has become a global issue.

Western scholars have argued that while digital technology delivers conveniently customized information, it also lets individuals live in “echo chambers” of their own making. Lacking exposure to opposing information and views, people tend to rationalize their own opinions and grow increasingly extreme. Of these theories, the one most familiar to Chinese readers is the “information cocoon” proposed by Harvard legal scholar Cass Sunstein in “Republic.com.”

In fact, supporters of the “echo chamber” effect rely on an unproven assumption: that as long as people are exposed to diverse information, the “information cocoon” will dissolve and polarization will decline. But is that really the case? One netizen’s comment is thought-provoking: “I would also actively try to break out of the so-called information cocoon, but after looking at the information on the other side, I found that my own cocoon is still more comfortable.”

Chris Bail, a sociologist at Duke University and its Polarization Lab, raised the same questions in his book “Breaking the Social Media Prism”: If individuals are exposed to opposing views on social media, will it help them reflect on their own views and become less extreme? Is polarization an inevitable direction of group communication, or a situational result that occurs only under certain technological conditions?

In his preface to the Chinese edition of “Breaking the Social Media Prism,” Chinese communication scholar Liu Hailong spells out the truth about “polarization”: stepping out of the information cocoon and encountering opposing views does not make people more moderate and rational, and may instead make their positions more extreme.

Empirical research also undercuts the simple assertion that “algorithms lead to polarized views.” A research team at the University of Amsterdam ran a persuasive experiment: they used a large language model to generate 500 chatbots, each with its own persona, and built a minimalist social platform with no advertisements and no personalized recommendation algorithm, keeping only three basic functions: posting, reposting, and following.

After 50,000 free interactions, the experiment produced intriguing results: bots with similar positions spontaneously followed one another, those with different positions had almost no contact, and centrists were marginalized; a few influencer accounts captured most of the followers and reposts, leaving attention very unevenly distributed; and the clearer or more extreme the stance, the faster and wider the content spread.

The experiment shows that even without algorithms, problems such as echo chambers, concentrated influence, and the amplification of extreme voices still appear. These are tendencies rooted in human social behavior, not polarization caused by recommendation algorithms.
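The mechanism described above can be illustrated with a toy simulation. This is a minimal sketch, not the Amsterdam team's code: it replaces the LLM chatbots with a single numeric stance per agent, surfaces posts uniformly at random (so nothing is ranked or recommended), and assumes a hypothetical rule that an agent is more likely to follow an author whose stance is close to its own. The function names, the stance scale, and the follow probability are all illustrative assumptions.

```python
import random


def simulate(num_agents=100, steps=5000, seed=0):
    """Toy follow network with no recommendation algorithm (illustrative only)."""
    rng = random.Random(seed)
    # Assumption: each agent has one fixed stance in [-1, 1].
    stance = [rng.uniform(-1, 1) for _ in range(num_agents)]
    following = [set() for _ in range(num_agents)]
    for _ in range(steps):
        reader = rng.randrange(num_agents)
        author = rng.randrange(num_agents)  # posts surface uniformly at random
        if reader == author:
            continue
        # Assumed homophily rule: closer stances make a follow more likely.
        distance = abs(stance[reader] - stance[author])  # between 0 and 2
        if rng.random() < (1 - distance / 2) ** 4:
            following[reader].add(author)
    return stance, following


def same_side_share(stance, following):
    """Fraction of follow edges that join two agents on the same side of zero."""
    edges = [(r, a) for r, followed in enumerate(following) for a in followed]
    if not edges:
        return 0.0
    same = sum(1 for r, a in edges if stance[r] * stance[a] > 0)
    return same / len(edges)


if __name__ == "__main__":
    stance, following = simulate()
    print(f"same-side follow share: {same_side_share(stance, following):.2f}")
```

If follows were indifferent to stance, roughly half of all follow edges would join agents on the same side. Under the assumed rule the share comes out well above half, even though no part of the code selects or ranks what an agent sees. The sketch only shows that a preference for similar voices is enough to cluster a network; it says nothing about how strong that preference is in real users.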

In Liu Hailong’s view, the greatest way social media alienates people is not by creating information barriers but by distorting self-identity: extreme positions can bring a strong sense of belonging and recognition, and even potential status and income. This temptation leads some people to embrace opposition actively, which is quite different from the intuitive picture of being passively trapped in a “cocoon.”

Chinese public discourse simply attributes “brain rot” and “polarized views” to algorithms, which amounts to swapping concepts and manufacturing a technology panic. The hotly debated “fool resonance” even reverses cause and effect: the political differences and class divisions of real society, together with the limits of human cognition, are the root cause of fragmented opinion. Algorithms, for the most part, reflect this division objectively rather than create it.

Overall, humans are naturally inclined to seek out groups with similar views, and this social instinct predates algorithms. Before the Internet, region, class, and profession had already built a natural “information sphere” around people. Blaming algorithms alone for entrenched circles and opposing views is, in essence, finding a simple “technological scapegoat” for complex social problems while avoiding the deeper social structures and human nature behind them.

You are more proactive than you imagine

This reporter noticed that the Shenzhen University “Report” also contains an intriguing set of survey data:

When faced with views that are completely opposite to their own, as many as 86.6% of users choose to watch, comment, and interact;

65.2% of users will actively try new things when the content is monotonous;

More than 60% of users have the willingness and behavior to actively seek different information and resist narrowing their horizons, such as “obtaining information through different platforms and fully understanding the process of events”;

75.6% of users believe that they can influence algorithms through behaviors such as searching, liking, and commenting.

These figures show that users are not passively trapped in the “information cocoon” but are “active information managers.” They build their own information environment by combining forward exploration (active searching, cross-platform verification) with reverse filtering (marking content as “not interested,” blocking content).

As one interviewee said, “I know that if I like certain content, it will keep being recommended, so now I consciously hold back on liking. After watching content I enjoy, I just swipe away, so that the algorithm pushes me more diverse content.” This remark matters: it indicates that users are far more proactive than we imagine.

Many people may still be puzzled: if they are actively filtering content and can see a great deal of varied content in their recommendations, why do they still feel trapped in an “information cocoon”? Professor Shi Wen and Professor Li Jinhui of the School of Journalism and Communication at Jinan University offer an answer in their research using agent-based testing (ABT).

The study used a Chinese algorithm-driven short-video platform as its data source to explore how news diversity changes as algorithms and user behavior interact. The results indicate that, compared with random recommendation without algorithms, algorithm-driven personalized news consumption shows higher diversity of news categories, and that algorithmic recommendation does more than users’ own choices to expose us to news on different topics.
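The article does not say which diversity measure the study used. One common way to quantify “diversity of news categories” is the Shannon entropy of the category distribution in a feed, and the sketch below assumes that measure purely for illustration. The function name and the two sample feeds are made up and are not data from the study.

```python
import math
from collections import Counter


def category_entropy(categories):
    """Shannon entropy, in bits, of the category mix in a feed.

    Assumption: entropy stands in for the study's (unspecified) diversity metric.
    """
    counts = Counter(categories)
    total = len(categories)
    # p * log2(1 / p) summed over categories, written with total / n for 1 / p.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


if __name__ == "__main__":
    # Hypothetical feeds, invented for this example.
    narrow_feed = ["cooking"] * 7 + ["pets"]
    varied_feed = ["cooking", "pets", "science", "news"] * 2
    print(category_entropy(narrow_feed), category_entropy(varied_feed))
```

A feed made up of a single category scores zero, and a feed spread evenly over more categories scores higher, so comparing the score of an algorithmic feed against a random or self-selected feed is one way the comparison described above could be made concrete.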

It seems that algorithms already include mechanisms against “cocoons” in their design. The diversity of algorithmic recommendations is even higher than when “personalized recommendations are turned off.” The reason we still feel trapped by algorithms is, at bottom, that human attention and memory preferences are at work. As mentioned earlier, extreme content enjoys a “cognitive privilege” because it is stimulating, which makes it easier for us to remember and magnify. Mundane everyday content (cooking, scenery, popular science) makes up most of the recommendation stream, yet we automatically filter it out and forget it.

When we complain that “algorithms create group opposition,” it is actually our own attention preferences that filter and amplify a handful of extreme signals, while the algorithm simply carries out its recommendation logic. Some may still ask: is there not a tension between individual will and the guiding role of algorithms? How far should algorithms defer to what each user wants? Consider that if the algorithm gave up its guiding role entirely, users who want to isolate themselves could end up trapped even deeper in a “cocoon.”

Of course, this may be a philosophical question about how to live. But returning to how we each feel, a calm mindset toward algorithms matters more. True information literacy may begin with recognizing one’s own cognitive biases and deliberately paying attention to the ordinary, unstimulating content that forms the foundation of the world. Simply put, algorithms are just tools, a mirror. What the mirror reflects depends entirely on the person standing in front of it and on the real life that shapes that person.

We may not be able to push away the weight of life right away, but we can at least let go of our unfounded panic about technology. There is no need to worry about the “information cocoon,” because our true “battlefield” lies outside of information, in the concrete, fine-grained life of every day. Rather than worrying about being tamed by algorithms, we would do better to think about how to pry open the possibility of change wherever we can.
